This post explores some techniques I’ve been using to improve the security of services on my home network and to make them easier to recover after hardware failures or other disasters. Below, I’ll describe how and why I’m moving more and more services onto virtual machines (VMs). This is better for security because an attacker who exploits a vulnerability in a VM will most likely be able to compromise only the VM itself, not the whole host server. And at least with my Synology server, full “bare metal” backups of the VMs are supported, including the ability to cluster servers so that switchover or failover is possible with just a few minutes of downtime. That makes virtual computers a lot more recoverable and relocatable than physical hardware.

I’m going to detail below how I manage this with a couple of VMs deployed on a cluster of two servers. The details of how I do this on a Synology NAS are pretty specific to that hardware, but the concepts are not.

Highlights of this framework include:

- Packaging services in a VM contains the scope of the damage when the “server” is compromised.

- Clustered hosts make it easy to move VMs to a new host or to fail over a VM if its host server is down.

- Snapshots of VMs can be taken instantly on a schedule and then replicated to other hosts in the cluster.

- VMs can be exported to an external file system for off-site backup.

How I put the pieces together

So much for the abstract. Below I’ll show how I put this architecture together on my home network, clustering two servers that share two virtual machines.

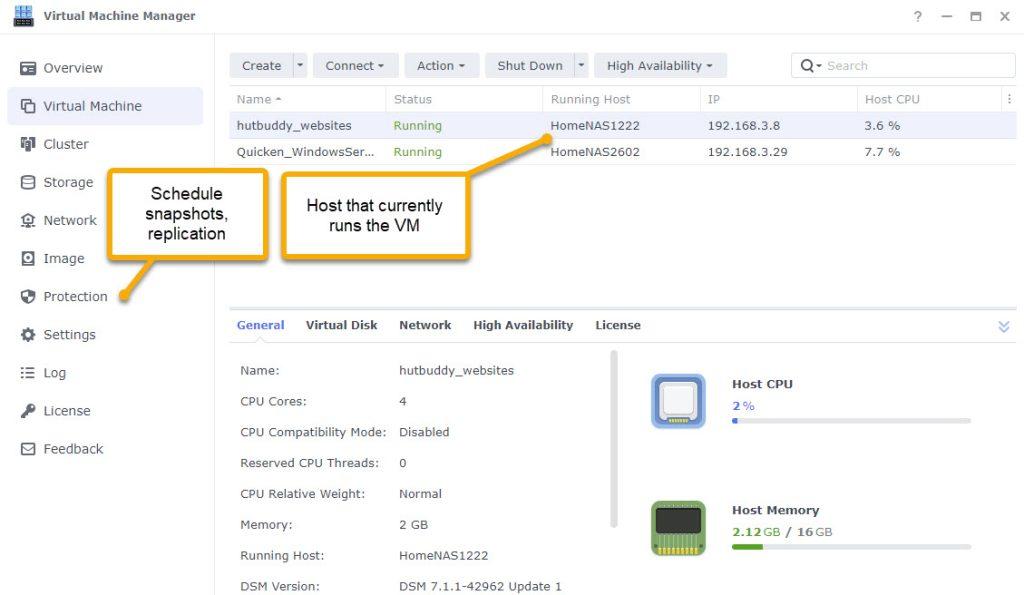

The purpose of the virtual machines isn’t hugely relevant, but as you’ll see in the screenshots here, my two virtual computers are hutbuddy_websites and Quicken_WindowsServer. The first runs a copy of Virtual DSM and hosts a few websites on my network. Websites can be notoriously vulnerable to attack. While I’m careful with security on those sites, it’s good to know that if that VM were ever compromised, the damage would be limited to those websites and not my whole network. The second VM is something I use for running Quicken on a virtual Windows machine.

Let’s start with VMs that already exist but aren’t yet protected the way I’ll outline. On a Synology server, and on many others, backing up virtual computers can get tricky, and some of it gets downright philosophical, with certain camps insisting that you should just back up the VM from within the VM itself. Yeah, that’s possible, but recovering from a disaster then means rebuilding the VM from scratch, starting with installing an operating system. With anything complex that takes hours, maybe days. I’m not settling for that because I don’t have to…

Clustering virtual computers

The redundancy starts with clustering hosts that share the same virtual machines. Only one host at a time is designated to run a given VM, but with a simple action in the Protection Plan you can move the VM to another host, either to balance load or because a host is down. Note that on a Synology, clustering requires a Virtual Machine Manager Pro license.

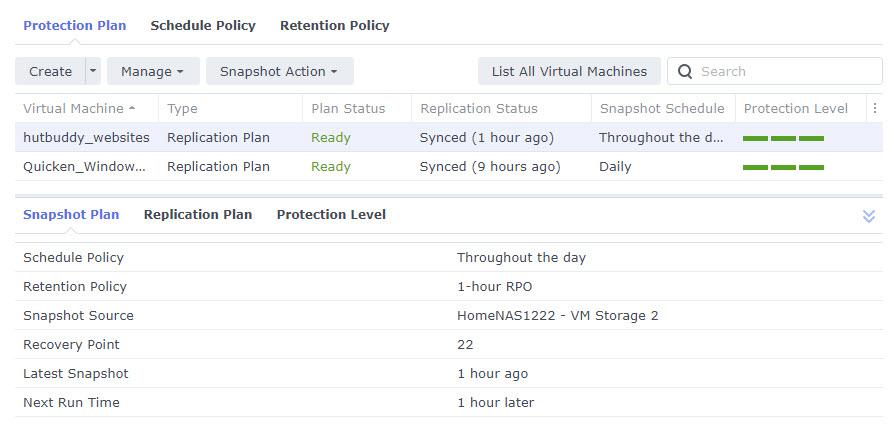

The key to redundancy is in the Protection Plan you choose for the VM. By clicking on Protection you get to this console.

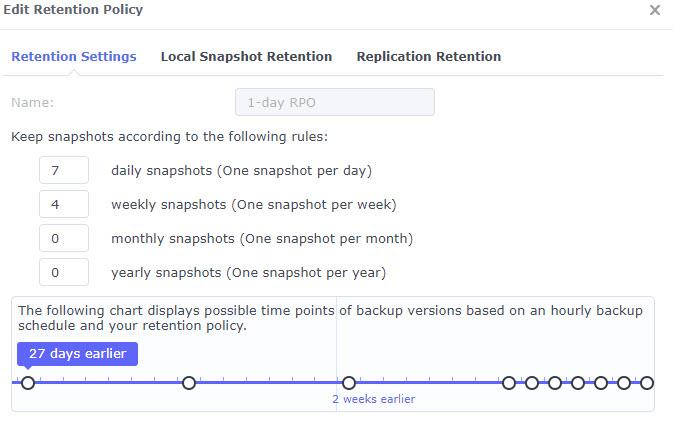

In the protection plan you schedule snapshots. A snapshot captures the complete state of the virtual computer at a point in time, and snapshots can be taken while the VM is running, as filesystem-consistent snapshots. You then define a Retention Policy that says exactly when the space used by old snapshots is released.

In the example policy above, the system retains snapshots for the last week and then keeps one snapshot per week for the last month.
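To make a policy like this concrete, here is a rough sketch of that kind of rule as a shell function. It only illustrates the idea and is not how Virtual Machine Manager actually decides what to prune; the function name snapshots_to_keep is hypothetical, and the sketch assumes GNU date and snapshot dates in YYYY-MM-DD form.

```shell
# Hypothetical sketch of a retention rule like the example policy:
# keep every snapshot from the last 7 days, keep only the newest snapshot
# per ISO week for snapshots 7 to 30 days old, and prune anything older.
# NOT Synology's implementation. ASSUMES GNU date and YYYY-MM-DD input.

# snapshots_to_keep REF_DATE DATE...
# Prints the snapshot dates to keep (newest first), measured from REF_DATE.
snapshots_to_keep() {
  local ref_s d d_s age_days week
  local -a keep=()
  local -A newest_in_week=()   # ISO week -> newest snapshot date in that week
  ref_s=$(date -u -d "$1" +%s) || return 1
  shift
  for d in "$@"; do
    d_s=$(date -u -d "$d" +%s) || return 1
    age_days=$(( (ref_s - d_s) / 86400 ))
    if (( age_days < 0 || age_days > 30 )); then
      continue                              # in the future or older than a month: prune
    elif (( age_days < 7 )); then
      keep+=("$d")                          # last week: keep every snapshot
    else
      week=$(date -u -d "$d" +%G-W%V)       # 7 to 30 days old: newest per ISO week
      if [[ -z ${newest_in_week[$week]:-} || $d > ${newest_in_week[$week]} ]]; then
        newest_in_week[$week]=$d
      fi
    fi
  done
  keep+=("${newest_in_week[@]}")
  if (( ${#keep[@]} > 0 )); then
    printf '%s\n' "${keep[@]}" | sort -r
  fi
}
```

With a reference date of 2024-06-30, calling snapshots_to_keep 2024-06-30 2024-06-29 2024-06-25 2024-06-20 2024-06-19 2024-05-01 keeps 2024-06-29, 2024-06-25 and 2024-06-20. It drops 2024-06-19 because 2024-06-20 is a newer snapshot in the same ISO week, and drops 2024-05-01 because it is older than a month.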

That sounds like a lot of disk space: my websites VM takes up about 250 GB, so am I storing 15 or so copies of it? Not really. Snapshots take advantage of the Btrfs file system and store only the deltas, i.e. the blocks that changed. What it does mean is that if you delete a lot of data inside the VM, the space on the host isn’t freed immediately (unless you manually delete the snapshots, which you can do). That’s usually a good thing!

The outcome of clustering hosts like this is that if a host goes down, I can fail its VMs over to the other host in just a few minutes. And if a VM crashes or is otherwise damaged, I can restore it from one of the snapshots taken at various times that day, or from less frequent ones going back up to a month.

What’s missing?

OK, so now we have two host servers that can each run the very same virtual machines. Not just sort of the same, but the same all the way down to the full contents of the file system (as of the last replicated snapshot), the MAC address, everything. If a server goes down, I can boot the VMs it hosted on the other server within minutes, and they’re completely back in operation.

The remaining problem: what if I lose both servers? The two servers are physically close to each other, so theft, fire, or some other disaster could take both of them down, perhaps permanently. Obviously I won’t recover from that in just a few minutes, but the real problem is that the servers were replicating snapshots to each other, so now ALL the snapshots are gone!

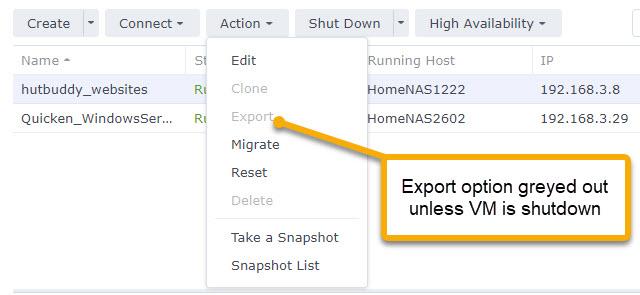

One solution would be to periodically export the VM to a file. This is NOT a “snapshot” made of deltas; it’s a great big file containing the whole state of the VM and everything in its internal file system.

The problems here are twofold. First, exporting a big VM might take several hours, and for the whole export the VM has to be shut down. Second, this is a manual action! I’m loath to keep manual procedures I could automate. But can I?

At first it seemed like I was stuck here, and that’s indeed where I stayed for months. Ultimately a friend on Reddit helped me out, and I found a German website that details a solution using an internal utility found in DSM (good thing Google translates).

(I’m fine with using this even though it isn’t publicly documented; be your own judge.)

Here’s my SSH session (run it with root privileges, i.e. after sudo -i):

```
/volume1/@appstore/Virtualization/bin/vmm_backup_ova --help
Usage: /volume1/@appstore/Virtualization/bin/vmm_backup_ova [--dst] [--batch] [--host] [--guests] [--retent] [--retry]
backup VM to shared folder on VMM

Options:
  --default        use default options to backup
  --dst            shared folder path for storing backup OVA
  --batch          the number of VMs exporting at a time (default: 5)
  --host|--guests  mutually exclusive options
                   '--host' only backup VMs which repository is on the specified host (default: all)
                   '--guests' only backup specified VMs (default: not specified, use | for seperator if there are multiple targets)
  --retent         the number of backups for retention (default: 3)
  --retry          the number of times for backup retrying (default: 3)

Examples:
  Run backup script by default
    ./vmm_backup_ova --default

  Backup all guests which repository is on the host and store OVAs in certain shared folder
    ./vmm_backup_ova --dst=<share-name> --host="<host-name>"

  Backup all guests which repository is on the host and limit the number of VMs exporting at a time to avoid affecting performance
    ./vmm_backup_ova --batch=2 --host="<host-name>"

  Backup certain guests and store the last two OVAs per VM
    ./vmm_backup_ova --guests="<guest_name_1>|<guest_name_2>" --retent=2

root@HomeNAS2602:~#
```

The vmm_backup_ova utility is the cat’s meow here. I launch it from a shell script that reads as follows:

```shell
#!/bin/bash
# clone/export VMs on this host for disaster recovery
set -e
/volume1/@appstore/Virtualization/bin/vmm_backup_ova --dst=VMBackups --host="HomeNAS2602" --retent=1
```

Here I’m telling vmm_backup_ova to export every VM whose repository is on that host, store the exports in a shared folder called VMBackups, and retain only one backup. A key advantage of this utility is that we do NOT have to shut down the VM! Instead, vmm_backup_ova starts by making a temporary clone of the running VM, which happens almost instantly. It then exports that clone (which is never run) while the real VM keeps running. The export of a large VM might take several hours, but it runs in the background while everything else continues to function, and afterwards the clone is automatically deleted.

Tip: Avoid spaces in your virtual computer names. In my experience, the utility creates destination directories with the wrong names and then can’t populate them. Note my use of underscores instead.
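Following that tip, a small guard can catch problem names before an export starts. The hypothetical helper below (build_guests_arg is not part of vmm_backup_ova) builds the --guests option from a list of VM names, joining them with the | separator shown in the help output and refusing names that contain whitespace or a | of their own.

```shell
# Hypothetical helper (not part of vmm_backup_ova): builds the --guests option.
# build_guests_arg NAME...
# Prints --guests=NAME1|NAME2|..., or fails if a name contains whitespace or "|".
build_guests_arg() {
  local name joined=""
  if (( $# == 0 )); then
    echo "build_guests_arg: no VM names given" >&2
    return 1
  fi
  for name in "$@"; do
    if [[ $name =~ [[:space:]] ]] || [[ $name == *"|"* ]]; then
      echo "build_guests_arg: unsafe VM name '$name'; rename the VM first" >&2
      return 1
    fi
    joined+="${joined:+|}$name"   # put the "|" separator before every name but the first
  done
  printf '%s\n' "--guests=$joined"
}
```

For example, args=$(build_guests_arg hutbuddy_websites Quicken_WindowsServer) || exit 1 produces --guests=hutbuddy_websites|Quicken_WindowsServer, which can then be passed to vmm_backup_ova as a single quoted argument ("$args") alongside options such as --dst and --retent.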

In practice I run a script like that on each of the two hosts. Conveniently, the Virtual Machine Manager GUI lets me see and monitor the snapshot/export process even though I didn’t start it there. And although each NAS exports to its own file system, the VMBackups shared folder is also replicated to the other host via ShareSync, and Hyper Backup makes off-site copies of VMBackups. Finally, the VMBackups share itself is snapshotted, retaining content for up to a month (I snapshot nearly everything, if only to protect it from ransomware).
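Since a script like this runs on both hosts, one option is to avoid hard-coding the host name so a single copy works everywhere. The sketch below is a hypothetical variant of the export script (export_vms and DRY_RUN are illustrative names); it assumes the VMM host name matches the output of hostname when no name is passed, which is worth confirming in Virtual Machine Manager before relying on it.

```shell
#!/bin/bash
# Hypothetical variant of the export script; the same copy can run on each host.
# ASSUMPTION: with no argument, the VMM host name equals the system hostname.

VMM_BACKUP_OVA=/volume1/@appstore/Virtualization/bin/vmm_backup_ova
DEST_SHARE=VMBackups   # shared folder that receives the OVA exports
RETENTION=1            # keep only the latest export per VM

# export_vms [HOST]
# Exports every VM whose repository is on HOST.
# With DRY_RUN=1 it only prints the command it would run.
export_vms() {
  local host=${1:-$(hostname)}
  local cmd=("$VMM_BACKUP_OVA" --dst="$DEST_SHARE" --host="$host" --retent="$RETENTION")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "DRY RUN: ${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Running DRY_RUN=1 export_vms once by hand shows exactly what would be executed; the scheduled task would then simply end with a call to export_vms.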

I currently export once a month, scheduled in Task Scheduler. So if I lost BOTH hosts, I could still recover each VM from its latest export (on some replacement hardware, of course). Then I’d restore files from within the VM itself, since I’ll typically have more recent file-level backups, so I don’t have to settle for data as old as the last export.
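With exports happening only monthly, a failed run could go unnoticed for a long time. One option is a separate scheduled check that complains when a VM’s newest export is too old. This is a hypothetical sketch (check_exports is an illustrative name), assuming exports are *.ova files, that each VM gets its own subdirectory in the destination share, and that GNU find is available.

```shell
# Hypothetical monitoring sketch, not part of vmm_backup_ova.
# ASSUMPTIONS: exports are *.ova files, each VM has its own subdirectory
# under the destination share, and GNU find is available (-print -quit).

# check_exports DIR MAX_DAYS
# Returns 0 if every VM subdirectory of DIR holds an .ova modified within
# the last MAX_DAYS days; otherwise warns on stderr and returns 1.
check_exports() {
  local dir=$1 max_days=$2 vm_dir found=0 stale=0
  for vm_dir in "$dir"/*/; do
    [[ -d $vm_dir ]] || continue            # glob matched nothing
    found=1
    if [[ -z $(find "$vm_dir" -type f -name '*.ova' -mtime -"$max_days" -print -quit) ]]; then
      echo "STALE: no export newer than $max_days days in $vm_dir" >&2
      stale=1
    fi
  done
  if (( found == 0 )); then
    echo "MISSING: no VM export directories under $dir" >&2
    return 1
  fi
  return "$stale"
}
```

Something like check_exports /volume1/VMBackups 35 (the path is an assumption about where the share lives) leaves a few days of slack after the monthly run, and its nonzero exit status can trigger whatever failure notification the scheduled task is set up with.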