For all the docker users out there, I thought I’d share a couple of points about managing docker containers on your home server. These are important security issues that commonly get missed. The simple examples you see on the internet for installing docker containers usually won’t mention them, but they might save your whole system from being shut down by malware or ransomware.

What are these protections and why are they necessary? First let me cover a little background on how docker containers work. The code in a container runs as ordinary processes on the host, started and managed by the docker engine. The docker engine, by necessity, executes with root privilege, and unless told otherwise the processes inside a container run as root too. That means a container can read or write anything in the host folders that are mounted into it, and a flaw in the engine or a careless mount can expose far more. To cause damage, malware in the container need only delete or encrypt the files it has been given access to.

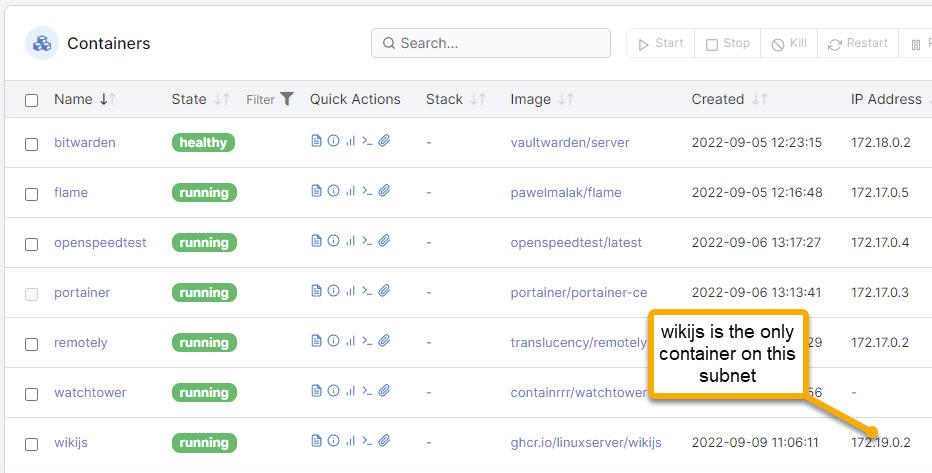

In addition to damaging the file system, containers can also carry out network attacks on other containers on your server. Containers normally run in the default bridge network. Being on the same subnet, they can all reach each other by IP address. (Lookup by container name only works on user-defined networks, but an attacker doesn’t need names when the subnet is small enough to scan.) The requests they send to each other may be malicious, and a firewall on your router or LAN won’t block them since they never leave the docker subnet, which is not a real network but a virtual bridge on the host.
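To see which containers currently share the default bridge, and so can reach each other, something like the following should work from an SSH session on the host. It’s only a sketch: the function name bridge_peers is my own invention, and it assumes the docker CLI is on your path and that you have permission to talk to the daemon (typically root or sudo).

```shell
# List the name and IP address of every container attached to a network.
# With no argument it inspects Docker's default network, which is named "bridge".
bridge_peers() {
  docker network inspect "${1:-bridge}" \
    --format '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}'
}
```

Run bridge_peers on its own for the default bridge, or pass a network name to look at a different one. Every container listed together is a neighbour of the others on that subnet.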

I recently went digging in this area when I got interested in installing the wiki.js container on my system to host a wiki site. Wiki.js is a fully fledged web publishing framework. Like any web application that accepts user content, it is exposed to injection attacks. There’s also a history of quite a few bugs, and I’m not sure the codebase is clean of malware or poor security practices. That might be a reason to have second thoughts about using it at all, but IMO that’s a little drastic if things are managed well.

But these concerns did spur me to learn about some controls that can be put in place, and how to use them. What I’m looking for here is that the attack surface is limited to the wiki.js website itself, not my whole server. This means that an attacker might bring down wiki.js and might gain access to any information that’s been published there, but the numerous other services on the server, like my password manager, websites/SQL databases, financial software, online movies, etc., remain unaffected.

Isolate Docker Containers

Docker provides a couple of ways to manage container security. You just have to make a point of using them whenever you have reason to be concerned about what a container might do (which, uh, is all the time; I should have been doing this all along).

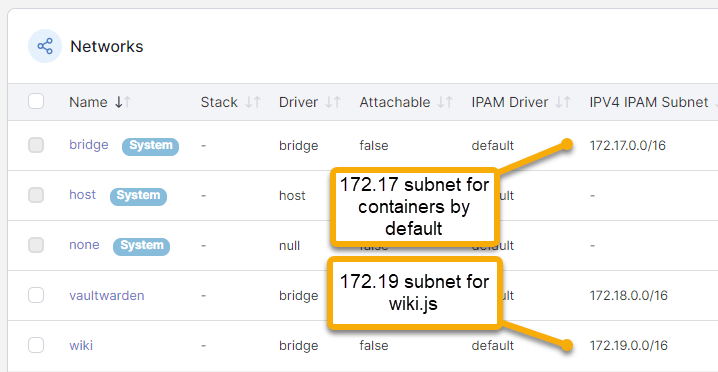

- Give the container its own network. Most people install containers on the default bridge network by simply not specifying otherwise, and the examples you find on the web usually leave the network out to keep things simple. Alternatively, you can isolate your container on its own network, which means it has its own subnet. Now, even if your container magically knew the IP address of a container on another network, it would not be able to send it anything; docker does not route traffic between separate bridge networks. This is why docker has this facility and why you should use it.

- Limit the file-system privilege of the container. As mentioned, the docker engine runs with root privilege, and by default so does the code inside the container. That would seem to drive a nail into the coffin for any goal of having your container touch the file system with limited privileges. But there is a way to address just this concern. Docker itself has the --user option, and many images, including the linuxserver.io image I use below, accept PUID/PGID environment variables that tell the container’s startup scripts to run the application as a specific user and group. So barring some kind of zero-day vulnerability in the runtime, this goes a long way toward limiting the damage done by ill-formed or ill-intentioned code. Again, you don’t usually see these arguments getting used. They won’t protect you unless you use them.

How I went about container isolation by example

The details of applying the above docker facilities are system specific, but similar steps will apply regardless. In a broad sense, the job is to create a dedicated bridge network for the container, use it, and then also limit the container’s file system privileges.

These are the specific steps I took to deploy the wiki.js container on my DS-1520+ NAS. There are lots of ways of doing the equivalent things; this is just a by-example of the steps I took, based on what’s easy and familiar to me.

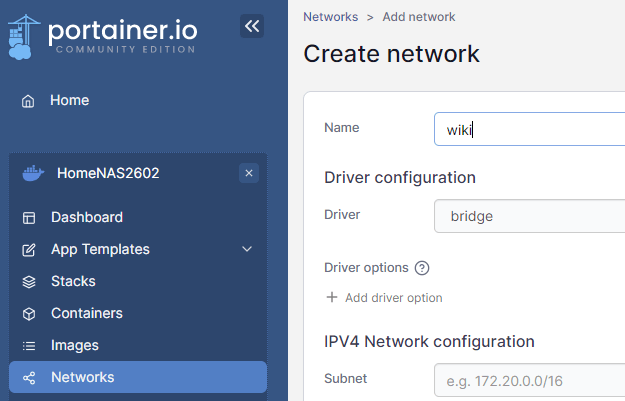

The first thing I’m going to do is create a network that I’ll call “wiki” where I’ll isolate the wiki.js container. I do that by running portainer, selecting my host, going to Networks and clicking Add. I fill in the name of the network as “wiki”, confirm the Driver is “bridge”, accept the defaults on everything else and save this as a new network.

Now you can see your new network, set up on its own subnet.
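If you’d rather not use portainer, the same network can be created from the command line. This is a minimal sketch of what I believe is the equivalent; create_isolated_network is a made-up helper name, and it assumes you’re in an SSH session with rights to run docker.

```shell
# Create a user-defined bridge network, then print the subnet Docker gave it.
# $1 is the network name, e.g. "wiki".
create_isolated_network() {
  docker network create --driver bridge "$1" >/dev/null || return 1
  docker network inspect "$1" \
    --format '{{range .IPAM.Config}}{{println .Subnet}}{{end}}'
}
```

Calling create_isolated_network wiki should leave you with the same “wiki” bridge that the portainer steps produce, and the printed subnet should differ from the one the default bridge uses.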

With the network ready, I’ll now set up a user account for limiting the container’s privileges.

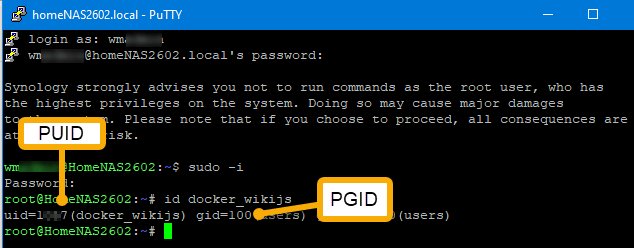

Start by creating a system user. On my system I just went to the Control Panel and set up a new user I call “docker_wikijs”. The only directory this user has any access to whatsoever is the shared folder where wiki.js maintains all its settings and data.

Getting the PUID/PGID takes executing the linux id command. If you’re comfortable with SSH and have it enabled on your server, you can open an SSH session and get the output as shown by this example where I execute “id docker_wikijs”.

So what if you’re NOT so comfortable with SSH and you don’t have it set up? Well, on a Synology, don’t despair. You can actually execute the id command by setting up a task to do that in the Control Panel. The output will come to you as email. See this easy guide on doing that. (By the way, you can use this same trick to execute any task as root, such as docker run; just know that you need to take proper care doing so.)

So take the uid and gid values that come from the id command, and that’s all you need for the PUID and PGID arguments of the docker run command.
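To save copying numbers by hand, the two values can be pulled out directly with id -u and id -g. Here’s a small sketch; puid_pgid_args is a hypothetical helper name, and the account you pass in has to exist on the host already.

```shell
# Print ready-to-paste PUID/PGID arguments for a given host account.
# `id -u` gives the numeric user id, `id -g` the numeric primary group id.
puid_pgid_args() {
  uid="$(id -u "$1")" || return 1
  gid="$(id -g "$1")" || return 1
  printf '%s\n' "-e PUID=$uid -e PGID=$gid"
}
```

For example, puid_pgid_args docker_wikijs prints the two -e arguments with that account’s numbers filled in, ready to drop into the docker run command.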

Having prepared the shared folders that wiki.js specifically wants, I’m now ready to execute docker run to install the container. In the following docker run command, the --network, PUID and PGID arguments are the ones that isolate the container.

    docker run -d --name=wikijs \
      --network=wiki \
      -e PUID=<uid value> \
      -e PGID=<gid value> \
      -p 3540:3000 \
      -e TZ=America/New_York \
      -v /volume1/docker/wikijs/config:/config \
      -v /volume1/docker/wikijs/data:/data \
      --restart always \
      ghcr.io/linuxserver/wikijs

The network argument naturally puts the container on that bridge instead of the default. The PUID and PGID arguments are just simple environment variables as far as docker is concerned, but the linuxserver.io image’s startup scripts pick up on them and quietly run the application as that user and group.

Like anything though, test it out. For example, reduce the user to read-only privilege and observe the wiki website failing to save files when you tell it to.

I execute the above docker run and then go to portainer and find wikijs installed as requested. 🙂
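If you prefer checking from an SSH session rather than portainer, here is a rough sketch of two quick checks that go with the “test it out” advice. The function names are made up for this post; docker inspect and docker top are standard subcommands, and I’m assuming the container is named wikijs as in my run command.

```shell
# Show the networks a container is attached to, one name per line.
container_networks() {
  docker inspect "$1" \
    --format '{{range $name, $net := .NetworkSettings.Networks}}{{println $name}}{{end}}'
}

# Show the container's processes as the host sees them; the UID column
# reveals which account each process is really running as.
container_processes() {
  docker top "$1"
}
```

container_networks wikijs should list only wiki. In the container_processes wikijs output, look at the UID column for the wiki.js process itself. With the linuxserver.io images I’d expect the supervisor processes to still show root, and the application to show docker_wikijs (or its numeric uid).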