To catch you up a little, I recently added a Synology DS1520+ to my home network. I’ve moved most of the services that were on my DS918+ onto this new server. In the process I’m learning how to set up a level of redundancy so that if one server goes down, I can continue with the other server instead of having nothing until I repair it and recover the data. Now, I’m not talking about a magical auto-failover where it all happens without my involvement. But I AM talking about being able to connect to the good server, tell it to take over various services, and then recover things, at most losing the last hour of work saved before the server went down.

The ability to failover services is not where this starts or even what I find to be the coolest about this setup. To do a failover first you need the right data to be on the remaining server. That’s where the BTRFS (pronounced butter-fuss) file system comes in. This is an amazing file system that manages file-versioning and instant snapshot capabilities with virtually no interruption to server operation. Synology servers have packaged this and various utility programs making it all work together nicely with no need to face SSH command lines etc.

In my case this results in all of the data on one server getting mirrored onto the other server. Pulling that off hourly, without significantly interrupting services, and then reliably using the data to recover after a server goes down calls for a special kind of architecture – we’re talking about many thousands of potentially interrelated files. For example, assume there’s an “index” file that lists some other files by name. If you copy hundreds of files to another server while they are still changing, then maybe you get the updated index but not the file it refers to, or vice versa. Much worse integrity issues can come up when backing up SQL databases while they are being updated. In both cases the copied data does not have “integrity”. When you recover a system with that data, the software might misbehave or totally crash.

Snapshots to the rescue…BTRFS prevents that kind of thing. Taking a snapshot of a directory on the hard disk allows you to later refer to what that directory used to look like at that moment in time. So if you take a snapshot at 9 a.m., then you can spend however long it takes to replicate that snapshot to another server or back it up to a USB etc. When you get done you won’t have a jumbled up mix where some of the files are dated 9:15 or 9:20 for example and got copied, but other files in that same time range are just missing.
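To make the index example concrete, here’s a minimal Python sketch. Everything in it is a toy of my own invention: the “file system” is just a dictionary, the `replicate` and `writer` helpers are hypothetical, and `copy.deepcopy` is only a stand-in for a snapshot (a real BTRFS snapshot copies no data). It shows a copy of the live data ending up with an index that points at a missing file, while a copy of the frozen view stays consistent.

```python
import copy

def dangling(fs):
    """Names listed in the index that are missing from the file set."""
    return [name for name in fs["index"] if name not in fs]

def replicate(source, between_copies=None):
    """Copy files one at a time; `between_copies` simulates a writer running mid-copy."""
    replica = {}
    names = sorted(source)  # file list captured when replication starts
    for i, name in enumerate(names):
        replica[name] = copy.deepcopy(source[name])
        if i == 0 and between_copies:
            between_copies()  # the live system keeps changing while we copy
    return replica

def make_live():
    return {"a.txt": "A", "index": ["a.txt"]}

def writer(fs):
    """A service adds a new file and then lists it in the index."""
    fs["b.txt"] = "B"
    fs["index"].append("b.txt")

# Replicating the live data: the index is copied after the writer ran,
# but b.txt was not in the file list when replication started.
live = make_live()
torn = replicate(live, between_copies=lambda: writer(live))

# Replicating a frozen view: the writer still changes the live data,
# but the copy is taken from the 9 a.m. picture.
live = make_live()
snapshot = copy.deepcopy(live)  # toy stand-in for an instant snapshot
clean = replicate(snapshot, between_copies=lambda: writer(live))

print("live copy, dangling index entries:", dangling(torn))
print("snapshot copy, dangling index entries:", dangling(clean))
```

The replica made from the snapshot is an hour-old picture at worst, but it is a picture of one single moment, and that is what makes it safe to recover from.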

That’s all great but what does it all mean to system performance?? That’s the coolest part of the show! The ambition to protect data integrity doesn’t require much creativity. Doing it without compromising speed is what does. Taking the snapshot is something that happens in an instant, and in itself consumes no significant space on the hard disk. No data gets copied when you take a snapshot. Instead the OS “drives a stake in the ground” and says “starting now, if you want to save some data in a file that’s going to overwrite data that existed before 9 a.m., I’m just not going to do that and will instead store it elsewhere…”. In other words, what has happened is not a bunch of I/O and processing, it’s more just a statement of policy…the policy of Copy On Write (COW).

The policy of COW means that, based on bookkeeping kept in the file system’s metadata (essentially just numbers, such as a count of who still refers to each block), the code is triggered to allocate new space for a file when that is necessary to preserve data that has been captured in a snapshot. The COW policy is managed such that only modified sections of a file are stored in separate disk blocks. Let’s say the file is a 100KB log file and a few lines of text get appended to it. The result of COW is that there are now logically two 100KB files on the hard disk that look slightly different at the end. The reality, though, is that the shared part of the file exists only once on the hard disk. When files get modified instead of appended to, the modified sections are stored separately, but only those sections. Worst case, the entire file gets modified, and only then do you actually duplicate all the storage (because COW had to find new space for everything in the whole file). Another nice side effect of COW is the ability to make instant copies of large files if they’re on the same volume. Not much needs to be done at the time of the copy request. I/O happens only later, when one of the files gets written to and COW allocates more space so that its content can actually be unique.
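Here’s a toy model of that policy in Python. It is only a sketch under my own assumptions: the `CowVolume` class, its method names, and the simple reference counts are made up for illustration and are not how BTRFS is actually implemented. What it does show is the behavior described above: taking a snapshot copies no data, and new blocks get allocated only when a write would otherwise clobber something a snapshot still refers to.

```python
class CowVolume:
    """Toy copy-on-write store: a file is a list of block ids, and blocks can be shared."""

    def __init__(self):
        self.blocks = {}     # block id -> bytes
        self.refs = {}       # block id -> how many files/snapshots point at it
        self.live = {}       # file name -> list of block ids
        self.snapshots = {}  # snapshot name -> {file name -> list of block ids}
        self._next_id = 0

    def _alloc(self, data):
        bid = self._next_id
        self._next_id += 1
        self.blocks[bid] = data
        self.refs[bid] = 1
        return bid

    def write_file(self, name, chunks):
        self.live[name] = [self._alloc(chunk) for chunk in chunks]

    def snapshot(self, snap_name):
        # No block data is copied: only the block lists are duplicated
        # and the reference counts are bumped.
        frozen = {name: list(ids) for name, ids in self.live.items()}
        for ids in frozen.values():
            for bid in ids:
                self.refs[bid] += 1
        self.snapshots[snap_name] = frozen

    def overwrite_block(self, name, index, data):
        old = self.live[name][index]
        if self.refs[old] > 1:
            # A snapshot still needs the old data, so store the new data elsewhere.
            self.refs[old] -= 1
            self.live[name][index] = self._alloc(data)
        else:
            self.blocks[old] = data  # nobody else cares, overwrite in place

    def append_block(self, name, data):
        self.live[name].append(self._alloc(data))

    def read(self, name, snap_name=None):
        table = self.snapshots[snap_name] if snap_name else self.live
        return b"".join(self.blocks[bid] for bid in table[name])


vol = CowVolume()
vol.write_file("app.log", [b"line1\n", b"line2\n"])
vol.snapshot("09:00")
vol.append_block("app.log", b"line3\n")         # append: one new block
vol.overwrite_block("app.log", 0, b"LINE1\n")   # modify: one new block, old one kept

print(vol.read("app.log"))            # current contents
print(vol.read("app.log", "09:00"))   # contents as of the snapshot
print(len(vol.blocks), "blocks stored for two logical versions of the file")
```

The unchanged middle block is stored once and shared by both versions, which is why two “logical” copies cost far less than twice the space.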

The problem of retention

So we’re making snapshots to lay the groundwork for replication. Even if we never replicated them, snapshots would still have value as a historical record of the file system that we can recover from, if nothing else to undo mistakes. Or ransomware attacks. But eventually the OS is going to run out of hard disk space if we keep the snapshots forever.

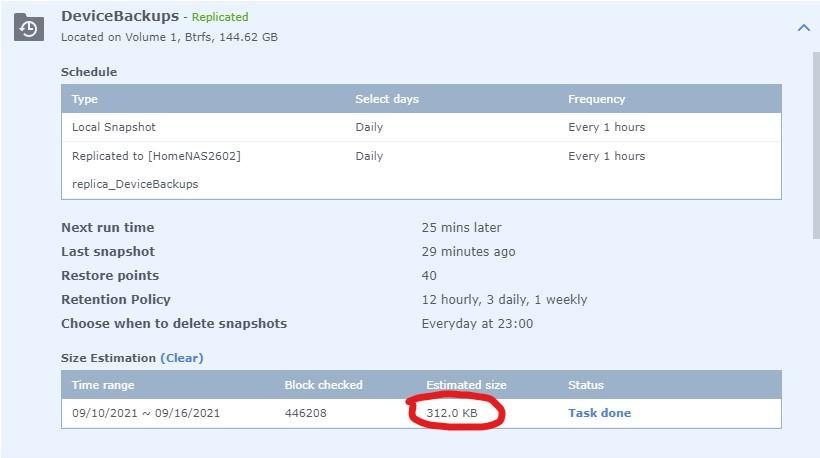

Here’s how the space gets managed so well with BTRFS. Let’s take an example. See in the image above how our Device Backups directory has 40 “restore points”. That means that there are 40 earlier versions of this 150 GB directory. That sounds like it could be a lot of space…but how much?

Opening up detail on this snapshot in the DSM GUI I see this information:

The key to keeping the space manageable is setting the retention policy. We see above that even though we have 40 versions of a 150GB directory, the actual “wasted space” (data that’s not in the very latest snapshot) is only 312KB or 0.2% of the total space! In other cases where files are getting modified more substantially, I might see numbers more like 5%. It just depends. You do have to manage it though: if your retention policy keeps the old snapshots around much longer, the space will stack up.

The Retention Policy is separate for every directory you want to snapshot. The settings above mean that I can recover from any hourly snapshot among the last twelve. Or I can go back to a snapshot from the end of any of the last three days, or from the end of either of the last two weeks. Once the data gets older than that, the space gets reclaimed and you can’t get that data back unless you have backups etc.
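For anyone who wants to see what a policy like that boils down to, here’s a rough Python sketch. It is my own guess at the logic, not Synology’s actual algorithm, and the `select_keepers` function and its parameters are hypothetical. It keeps the twelve newest snapshots, plus the last snapshot of each of the three most recent days and of each of the two most recent weeks; anything else is eligible to be pruned.

```python
from datetime import datetime, timedelta

def select_keepers(snapshots, hourly=12, daily=3, weekly=2):
    """Return the set of snapshot times to keep; everything else can be pruned."""
    ordered = sorted(snapshots, reverse=True)  # newest first
    keep = set(ordered[:hourly])               # the most recent hourly snapshots

    def newest_per_bucket(bucket_of, limit):
        seen = []
        for ts in ordered:
            bucket = bucket_of(ts)
            if bucket not in seen:
                if len(seen) == limit:
                    break
                seen.append(bucket)
                keep.add(ts)  # first hit in newest-first order = last snapshot of that bucket

    newest_per_bucket(lambda ts: ts.date(), daily)                       # one per day
    newest_per_bucket(lambda ts: tuple(ts.isocalendar())[:2], weekly)    # one per ISO week
    return keep


# Thirty days of hourly snapshots ending at a made-up date.
end = datetime(2024, 1, 31, 23, 0)
snaps = [end - timedelta(hours=h) for h in range(24 * 30)]

keep = select_keepers(snaps)
prune = [ts for ts in snaps if ts not in keep]
print(len(snaps), "snapshots taken,", len(keep), "kept,", len(prune), "pruned")
```

Some snapshots satisfy more than one rule at once (the newest one counts as hourly, daily, and weekly), which is part of why the number actually kept stays small.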

I’ve used this a few times to recover data. It’s as simple as browsing the snapshot and copying files from it or just restoring the whole snapshot (while optionally creating a new snapshot just in case).

Failover

There are some truly automatic failover capabilities in the Synology product line but the servers have to be completely identical and one of them is just on stand-by. That’s not my setup, and failover is less than automatic. But it’s just me – I’m not complaining.

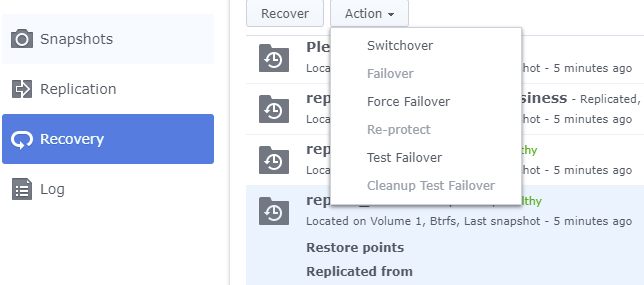

To fail over services, one option I have is to go to the snapshot on the good server (i.e. the replica of a directory that WAS on the bad server). The snapshot replication tool gives me some options for recovery of that snapshot as shown here…

There are various items to choose from, including testing a failover, switching servers when neither one has failed, etc. See the Synology documentation for more on using snapshot replication. When you fail over a directory, it gets marked as read-only on the original server (if that server is alive) and the read-only replica on the good server gets marked read-write. Seeing that services are in place to process that data can be its own problem. There’s no silver bullet that handles every situation painlessly. But the Synology snapshot tools bring to the masses some failover capabilities that have only been available to a commercial audience in the past.

But wait there’s more – deduplication too…

I almost forgot…among the beautiful outcomes of software that’s designed right, is that exactly the same software delivers multiple functional benefits. We’ve talked about data integrity on snapshots, and saving efficient deltas that consist only of modified blocks in the files, instantly copying files regardless of how big they are, but there’s also the process of deduplication that virtually falls out of this architecture as a “simple” batch process with very high value when it comes to saving disk space. This is a whole lot like data compression, but with none of the runtime overhead of reading truly compressed data.

Deduplication means there are no duplicate files. Or more accurately, there are not even duplicated blocks within files. If you step back and look at the whole file system, or even step further back and consider multiple volumes on separate hard disks, the data can be managed so that the same blocks of data are stored only once! Take a classic example where this is HUGE…let’s say I have multiple directories that store complete backups of workstations in my home (as I do, using Active Backup for Business). A big part of backing up a workstation is just backing up lots of program images, including the Windows operating system itself. So if I back up five workstations, does that mean the server has five different backups including all of Windows? Not necessarily. In my case the backup software exploits the ability of BTRFS to track signatures on every block of data and avoid duplicating the same blocks regardless of how many “logical” copies of them exist. Later on, if files get modified, new space naturally gets allocated and data copied, because that’s just how Copy On Write works.
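The idea of storing each block once can be sketched in a few lines of Python. To be clear, this is a toy under my own assumptions and not how BTRFS or Active Backup for Business does it internally: the `DedupStore` class is hypothetical, the block “signature” here is a SHA-256 hash, and the workstation “backups” are tiny made-up byte strings.

```python
import hashlib

class DedupStore:
    """Toy block store: identical blocks are kept once, keyed by their hash."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = {}  # SHA-256 hex digest -> block bytes (stored once)
        self.files = {}   # file name -> list of digests, in order

    def put(self, name, data):
        digests = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            self.blocks.setdefault(digest, block)  # only stored if not seen before
            digests.append(digest)
        self.files[name] = digests

    def get(self, name):
        return b"".join(self.blocks[d] for d in self.files[name])

    def logical_size(self):
        return sum(len(self.blocks[d]) for ds in self.files.values() for d in ds)

    def physical_size(self):
        return sum(len(block) for block in self.blocks.values())


# Four distinct blocks standing in for "all of Windows", shared by every workstation.
os_image = b"".join(bytes([i]) * 4096 for i in range(4))

store = DedupStore()
for n in range(5):
    personal_data = bytes([10 + n]) * 4096  # one block unique to each workstation
    store.put(f"workstation-{n}", os_image + personal_data)

print("logical bytes: ", store.logical_size())
print("physical bytes:", store.physical_size())
```

Five logical backups of five blocks each end up as nine stored blocks: four shared ones for the operating system plus one unique block per workstation.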

Deduplication does have a processing load and needs to be executed as a batch process against specific directories. For me that just amounts to letting the Active Backup software take care of this and I just enjoy the benefit 🙂